One of the main tenets of agile methodology is to begin software testing as early as possible in the development process. This differs from the traditional waterfall approach, where testing is executed only after the coding phase. In that phase-by-phase linear approach, coding begins only after one or more project teams have analyzed and prioritized business requirements and then translated them into numerous specification and design documents during the design phase of the project. Test cases (the sets of conditions or variables used to determine whether a system under test satisfies its requirements) are written manually from those specifications and later used by the QA team to create tests. This approach really only works if you know with certainty what you're building, who you're building it for, and how to build it. In an increasingly VUCA (Volatile, Uncertain, Complex, and Ambiguous) world, where the speed of business and the pace of change keep accelerating, the agile approach to development and testing is a more practical way to deal with uncertainty because it emphasizes just-in-time requirements rather than upfront preparation.

User stories, which are commonly used with the popular Scrum agile process framework, are one of the most widely used requirements techniques on Agile projects. Scrum is so widely used that many people confuse it with the umbrella term Agile. As an Agile process framework, Scrum takes a time-boxed, incremental approach to software development and project management, advocating frequent interaction with the business during what are known as Sprints (which other agile frameworks call iterations).

The simplest Scrum project team (as shown in Figure 2) is made up of a customer or business-unit stakeholder (known as the Product Owner), a team facilitator (called the ScrumMaster), and the rest of the agile development team. Team members interact frequently with business users, write software based on requirements that they pull from a product backlog (a prioritized list of work maintained by the Product Owner), and frequently integrate that software with code written by other team members.


Overview of the Scrum Agile Process Framework

Writing Test Cases from Acceptance Criteria

Product backlog items (PBIs) on Agile projects represent the work that needs to be done to complete the product or project, including software features, bugs, technical work, and knowledge acquisition. Software features are described from the customer's perspective in the form of user stories, which are high-level descriptions of desired functionality and goals. For every iteration or Sprint, user stories on the Product Backlog are refined and pulled into the Sprint Backlog, where the agile team agrees on the acceptance criteria, the proposed solution approach, and an estimate of the effort needed to complete each story. Acceptance criteria determine when a User Story works as planned and when a developer can mark it as ‘done.’ Because each Scrum team has its own Definition of Done for assessing when a User Story is complete, it's good practice for testers to begin writing test cases from the acceptance criteria. While writing test cases, a tester will often come across a case that requires changes to the acceptance criteria, which can lead to requirement changes and higher quality.

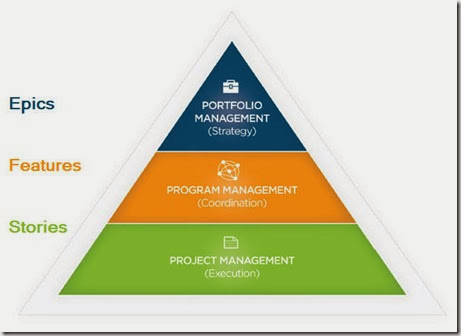

Epics, Features and User Stories

Items on the product backlog such as Epics, Features and User Stories need to be refined or groomed by the Product Owner and other agile team members as they are moved to the Sprint backlog. Epics are high-level features or activities that span Sprints, or even releases. Features are more tangible expressions of functionality, but they're still too general to build. User Stories are functional increments that are specific enough for the team to build.

Figure: Product Backlog from AgileBuddha

For example, the following Epic

— Allow your customers to access and manage their own accounts via the web

can be refined into Features and User Stories that you can test with acceptance tests. (A general recommendation is that User Stories should be granular enough to fit in a single iteration.)

Features describe what your software does. In this case, the Feature is

— Customer information can be entered and edited in an online shopping-cart application

User Stories are a further refinement that expresses what the user wants to do from his or her perspective. They usually follow a template like this:

As a <type of user>, I want <some desired outcome> so that <some reason>

A User Story for the example above is:

As a customer,

I want to be able to modify my credit card information

so that I can keep it up-to-date.
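The template above can be captured in a small data structure so that each story carries its own acceptance criteria, and each criterion can seed one test case. The following Python sketch is purely illustrative: the `UserStory` class, its methods, and the two acceptance criteria are hypothetical examples, not part of the original story or of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    """A story following 'As a <type of user>, I want <outcome> so that <reason>'."""
    role: str
    goal: str
    reason: str
    # Hypothetical acceptance criteria agreed on by the team during refinement.
    acceptance_criteria: list[str] = field(default_factory=list)

    def as_sentence(self) -> str:
        # Fill the three template slots in order.
        return f"As a {self.role}, I want {self.goal} so that {self.reason}."

    def test_case_titles(self) -> list[str]:
        # One test case per acceptance criterion keeps requirements and tests aligned.
        return [
            f"TC-{i}: Verify that {criterion}"
            for i, criterion in enumerate(self.acceptance_criteria, start=1)
        ]


story = UserStory(
    role="customer",
    goal="to be able to modify my credit card information",
    reason="I can keep it up-to-date",
    acceptance_criteria=[  # illustrative criteria, not from the original story
        "the customer can replace the stored card number",
        "an expired card is rejected with an error message",
    ],
)

print(story.as_sentence())
for title in story.test_case_titles():
    print(title)
```

Keeping the criteria on the story object, rather than in a separate document, makes it easy to see at a glance which criteria still lack a test.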

User Stories Aren't Requirements

It's important to realize that User Stories aren't formal documents in the way traditional requirements are. User stories are better understood as placeholders for conversations among a project's stakeholders, held to reach agreement on the acceptance criteria for a particular piece of functionality. One way to encourage this kind of conversation is through "Three Amigos" meetings, in which a product owner (or a business analyst), a developer, and a QA tester get together (face-to-face or online) to review the requirements, tests, and dependencies of a feature request on the backlog.

Often, the product owner or an analyst, as a representative of the business, takes the lead and asks a programmer and a tester to look more closely at a feature. In other cases, a programmer or tester may initiate the meeting and set the agenda. The advantage of Three Amigos meetings is that they provide orthogonal views on the feature under discussion: the business representative explains the business need, the programmer the implementation details, and the tester what might go wrong. Three Amigos conversations don't have to be limited to three people. A tester might want advice from a security expert, or a programmer may seek recommendations from a database or infrastructure specialist. The goal of a Three Amigos meeting should be to bring in at least three different viewpoints and to reach some kind of consensus about acceptance criteria. The Amigos may also decide that a feature is not yet ready for further refinement and push its development to a later iteration.

What Makes a Good User Story?

User stories should be well-defined before they are moved to the Sprint backlog. One way to ensure this is to follow the "3 C's" formula devised by Ron Jeffries, which captures the three components of a User Story:

- Card – stories are traditionally written on notecards, and these cards can be annotated with extra details

- Conversation – details behind the story come out through conversations with the Product Owner

- Confirmation – acceptance tests confirm the story is finished and working as intended

At the same time, it's worth remembering Bill Wake's INVEST mnemonic for agile software projects as a reminder of the characteristics of a high-quality User Story:

| Letter | Meaning | Description |
|---|---|---|
| I | Independent | The User Story should be self-contained, with no inherent dependency on another User Story. |
| N | Negotiable | User Stories, up until they are part of an iteration or Sprint, can always be changed and rewritten. |
| V | Valuable | A User Story must deliver value to the stakeholders. |
| E | Estimable | You must always be able to estimate the size of a User Story. |
| S | Small | User Stories should not be so big as to become impossible to plan, task, or prioritize with a reasonable level of certainty. |
| T | Testable | The User Story or its related description must provide the necessary information to make test development possible. |

The INVEST Mnemonic for Agile Software Projects

As mentioned earlier, Scrum projects use fixed-length Sprints, each usually lasting one to four weeks, after which potentially shippable code should be ready to demonstrate. Releasing a prototype, or minimum viable product (MVP), is also important in Scrum testing for getting early feedback from your customers. Once the MVP is released, you can gather feedback by tracking usage patterns, which lets you test a product hypothesis right away with minimal resources. Every subsequent release can then be measured by how well it produces the user behaviors you want it to achieve. A baseline MVP that contains just enough features to solve a specific business problem also reduces wasted engineering hours and curbs the tendency toward feature creep, or 'gold plating,' on agile software teams.

A baseline MVP also keeps agile teams from creating too many unnecessary user stories (along with their acceptance criteria and test cases), which can waste a great deal of time and resources. As always on agile projects, the primary purpose of writing user stories and creating test artifacts is to realize business goals and deliver value to stakeholders. This means that drafting test cases for a line-of-business enterprise application that will be in use for years should take higher priority than putting the same effort into a mobile gaming app that might not be mission-critical. In the latter case, you might be able to get away with a compatibility checklist approach, combined with exploratory testing, and avoid writing any test cases at all.

Another way to simplify writing test cases is to use behavior-driven development (BDD), an extension of test-driven development that encourages collaboration among developers, QA testers, and non-technical or business participants on a software project. BDD focuses on creating a shared understanding of what users require through structured conversations centered on a common business language and concrete examples. Cucumber Open is one of the most popular frameworks for implementing BDD and supports many modern development stacks. It uses Gherkin syntax, a set of special keywords that give structure and meaning to executable specifications, which are a way of writing down requirements. A BDD feature or user story should follow this structure:

- Who the primary stakeholder of the feature is

- What effect the stakeholder wants the feature to have

- What business value the stakeholder will derive from this effect

- The acceptance criteria or scenarios

A brief example of a BDD feature in this format looks like this:

Feature: Multiple site support
  Only blog owners can post to a blog, except administrators,
  who can post to all blogs.

  Background:
    Given a global administrator named "Greg"
    And a blog named "Greg's anti-tax rants"
    And a customer named "Dr. Bill"
    And a blog named "Expensive Therapy" owned by "Dr. Bill"

  Scenario: Dr. Bill posts to his own blog
    Given I am logged in as Dr. Bill
    When I try to post to "Expensive Therapy"
    Then I should see "Your article was published."

  Scenario: Dr. Bill tries to post to somebody else's blog
    Given I am logged in as Dr. Bill
    When I try to post to "Greg's anti-tax rants"
    Then I should see "Hey! That's not your blog!"

  Scenario: Greg posts to a client's blog
    Given I am logged in as Greg
    When I try to post to "Expensive Therapy"
    Then I should see "Your article was published."
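To show how the three scenarios in this feature pin down a single business rule, here is a plain-Python sketch that skips Cucumber's step-definition layer entirely. The `User` and `Blog` classes and the `try_to_post` function are hypothetical stand-ins for the real application; only the names and messages come from the feature file. The test functions follow pytest's `test_` naming convention, but they are ordinary functions and can also be called directly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    name: str
    is_admin: bool = False  # True for a "global administrator"


@dataclass(frozen=True)
class Blog:
    title: str
    owner: Optional[User] = None


def try_to_post(user: User, blog: Blog) -> str:
    """Hypothetical rule: owners post to their own blog; administrators post anywhere."""
    if user.is_admin or blog.owner == user:
        return "Your article was published."
    return "Hey! That's not your blog!"


# Background: shared context that every scenario starts from.
greg = User("Greg", is_admin=True)
dr_bill = User("Dr. Bill")
anti_tax_rants = Blog("Greg's anti-tax rants")
expensive_therapy = Blog("Expensive Therapy", owner=dr_bill)


def test_dr_bill_posts_to_his_own_blog():
    assert try_to_post(dr_bill, expensive_therapy) == "Your article was published."


def test_dr_bill_tries_to_post_to_somebody_elses_blog():
    assert try_to_post(dr_bill, anti_tax_rants) == "Hey! That's not your blog!"


def test_greg_posts_to_a_clients_blog():
    assert try_to_post(greg, expensive_therapy) == "Your article was published."


if __name__ == "__main__":
    test_dr_bill_posts_to_his_own_blog()
    test_dr_bill_tries_to_post_to_somebody_elses_blog()
    test_greg_posts_to_a_clients_blog()
    print("All scenarios pass.")
```

Each test function mirrors one Scenario and the module-level objects play the role of the Background, which gives the one-to-one link between a written scenario and an executable check that BDD aims for.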

BDD requires a mindset change in how you write requirements, write code, write test cases, and test code. Having developers and testers use a common business language makes it easier to build a suite of automated tests, since you have direct traceability from requirement to code to test case. These test cases can be generated as automated stubs from acceptance criteria or written manually by QA testers during exploratory testing.

A test case might be created as an automated script that verifies functionality against the original acceptance criteria. After manual exploratory testing, QA testers might suggest adding functionality to the application and incorporating updated test cases into the automated test suite.

Creating a Real-Time Requirements Traceability Matrix

One of the outputs of the requirements analysis phase of a traditional waterfall project is a requirements traceability matrix: a document that maps each requirement to other work products in the development process, such as design components, software modules, test cases, and test results. It is usually implemented as a spreadsheet, word-processor table, database, or web page, and it can quickly become outdated as the application is modified or new functionality is added.
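One way to keep such a matrix from going stale is to regenerate it from data every time tests run rather than editing it by hand. The Python sketch below assumes hypothetical requirement IDs, test-case IDs, and a simple dictionary of latest results; it builds the matrix and flags requirements that are failing or have no covering test.

```python
# Hypothetical data: requirement IDs and descriptions.
requirements = {
    "REQ-1": "Blog owners can post to their own blog",
    "REQ-2": "Non-owners cannot post to someone else's blog",
    "REQ-3": "Administrators can post to all blogs",
}

# Hypothetical data: test case ID -> (requirement it covers, latest result).
test_cases = {
    "TC-1": ("REQ-1", "passed"),
    "TC-2": ("REQ-2", "failed"),
    "TC-3": ("REQ-1", "passed"),
}


def build_matrix(reqs, tests):
    """Map each requirement to its (test case, result) pairs; uncovered ones map to []."""
    matrix = {req_id: [] for req_id in reqs}
    for tc_id, (req_id, result) in tests.items():
        # setdefault also surfaces tests that point at an unknown requirement.
        matrix.setdefault(req_id, []).append((tc_id, result))
    return matrix


def status(entries):
    """Summarize one row of the matrix."""
    if not entries:
        return "NOT COVERED"
    return "PASS" if all(result == "passed" for _, result in entries) else "FAIL"


matrix = build_matrix(requirements, test_cases)
for req_id, entries in matrix.items():
    covered_by = ", ".join(tc for tc, _ in entries) or "-"
    print(f"{req_id:<6} {status(entries):<12} {covered_by}")
```

In practice, the requirement-to-test mapping and the results would come from a test management tool or a CI run rather than hard-coded dictionaries, but the principle of deriving the matrix instead of maintaining it by hand is the same.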

Behavior-driven development, together with test management software, simplifies creating real-time documentation from automated specifications. This helps agile teams record which requirements were requested, how they have been implemented, and which test cases verify that implementation.

Explore how SmartBear helps strengthen collaboration, support agile testing, and integrate BDD directly into your software release management in Jira with Zephyr Squad Cloud (free 30-day trial).